AI That Builds AI: Crafting Your Own Agent Factory

From Concept to Code: How I Built an AI System That Creates Custom Agents (And How You Can Too)

Welcome to AI Weekly Challenge!

As promised in last week's post, I'm kicking off our new "AI Weekly Challenge" series where we'll be building practical AI solutions from scratch each week.

For our inaugural challenge, I decided to tackle something I've been itching to try - building an AI that creates custom agents based on conversations. Perfect first challenge for AI Craftsman - we're literally crafting AI that crafts AI!

What You'll Get From This Post

My entire thinking process (including dead ends)

Detailed final architecture (and the ideas I decided not to pursue)

Working code you can try yourself

Just to remind you what this series is about: every week, instead of just writing about AI, I'm creating something from scratch to test ideas, learn, and share my experience. Why? Because showing what learning the "AI/LLM dev world" looks like in practice might be more valuable than theoretical explanations.

Why This Idea?

I've been seeing all these startups raising millions for agent platforms. And it got me thinking: how hard is this actually? So I built one using LangGraph 🤘 Simple as that.

Well, not exactly simple, but definitely doable in a week - which says something about the state of the AI tools ecosystem right now.

My Thinking Process

To be honest, at the beginning I was thinking about making a self-adaptive agent that would change itself according to the conversation. I even started this week's project as a "chameleon agent." But the longer I thought about this, the more options I saw, and it started to become overwhelming. (That's how I lost 2 days! 😅)

Then I pivoted to the idea of "AI that creates AI." I came up with two options for doing that:

Option 1: AI That Writes Executable Code

Pros:

Extremely flexible - could create any type of agent

More impressive demonstration of capabilities

Cons:

Too many potential failure points

Would require complex dependency management

Agent frameworks aren't well documented, making it difficult for an LLM to reliably generate working code

Option 2: AI That Outputs Agent Blueprints

Pros:

More controlled environment

Could still demonstrate adaptation while reducing errors

Easier to visualize and understand what's happening

Cons:

Less flexible than dynamic code generation

Would require pre-defining components the AI could use

I chose Option 2, specifically creating an agent that defines configurations for LangGraph-based agents. Why?

Reliability: Graph agents tend to be more stable and predictable

Visualization: We can actually see the agent structure evolve

Control: Better balance between flexibility and guardrails

The Architecture: Building an Agent Factory

The system I built has two main components:

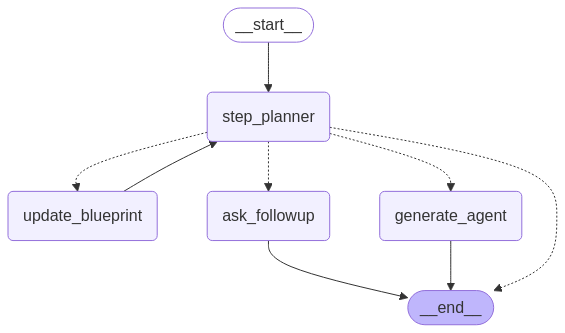

The Core Agent Creator: This is a LangGraph agent that:

Takes user requirements about what kind of agent they want

Asks follow-up questions to clarify the requirements

Creates a blueprint for an agent (nodes, edges, objectives)

Generates the actual agent from that blueprint

The Generated Agents: These are custom LangGraph agents that:

Are created from the blueprints produced by the Core Agent

Have their own nodes, edges, and state management

Can be saved, loaded, and used independently

Here's the basic architecture of the Core Agent Creator:

The magic happens in the `generate_agent` function, which takes a blueprint and creates a functioning agent:

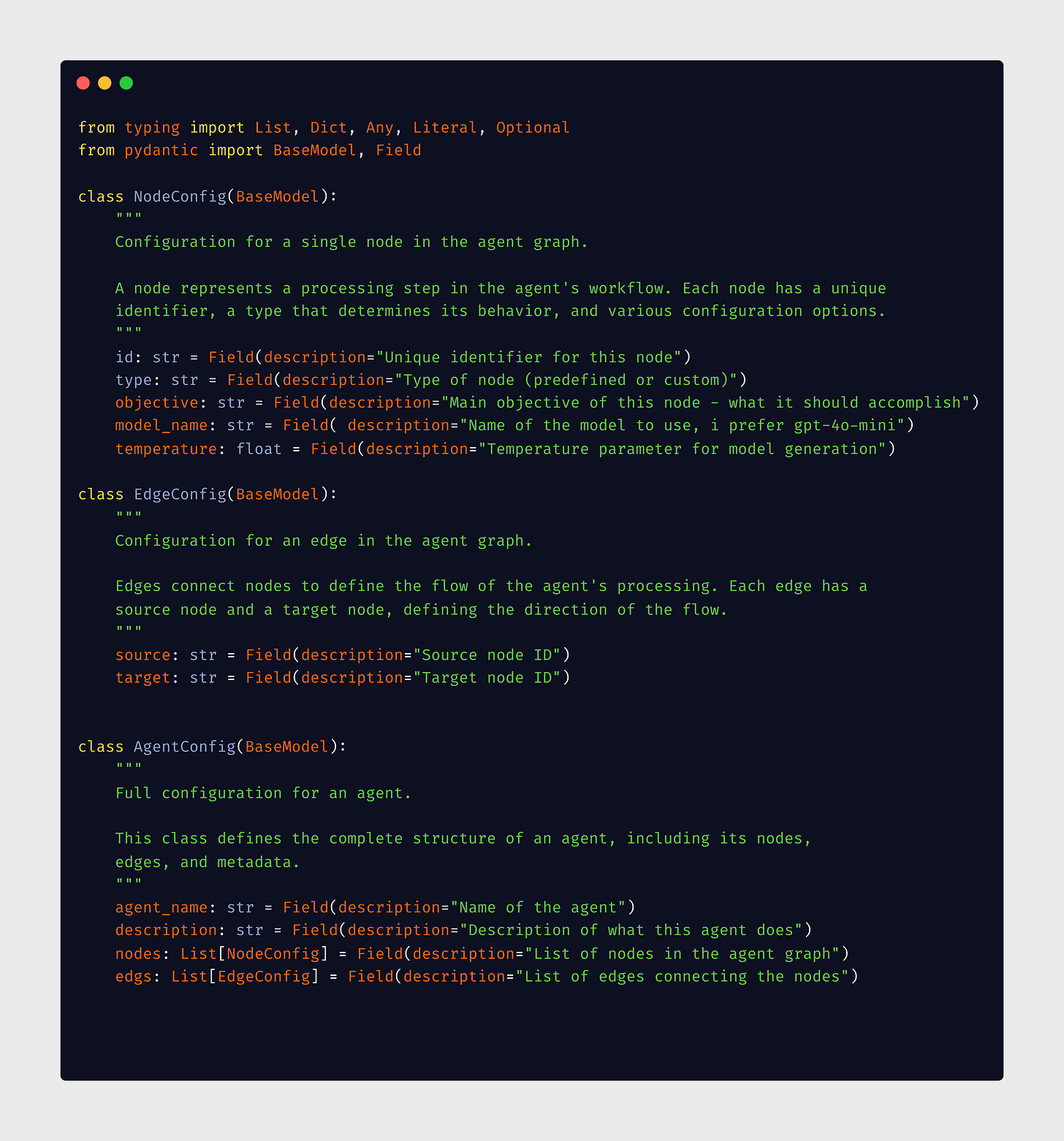
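To make the blueprint-to-agent step concrete, here is a minimal sketch of the idea. It is not the code from the repo and it does not use LangGraph: the graph runner, the `END` sentinel, the `make_node` helper, and the blueprint keys (`entry`, `nodes`, `edges`) are all assumptions made for illustration. Each node is a placeholder that records its visit instead of calling an LLM.

```python
from typing import Callable, Dict

State = Dict[str, object]
NodeFn = Callable[[State], State]

END = "__end__"  # hypothetical sentinel meaning "stop here" (not LangGraph's constant)


def make_node(name: str, objective: str) -> NodeFn:
    """Placeholder node: records its visit instead of calling an LLM."""
    def node(state: State) -> State:
        trace = list(state.get("trace", [])) + [f"{name}: {objective}"]
        return {**state, "trace": trace}
    return node


def generate_agent(blueprint: dict, max_steps: int = 50) -> Callable[[State], State]:
    """Turn a blueprint dict into a runnable agent function (illustrative stand-in)."""
    nodes = {n["name"]: make_node(n["name"], n["objective"]) for n in blueprint["nodes"]}
    next_node = {e["source"]: e["target"] for e in blueprint["edges"]}

    # Reject blueprints whose entry point or edges mention nodes that were never defined.
    mentioned = {blueprint["entry"]} | set(next_node) | set(next_node.values())
    unknown = mentioned - set(nodes) - {END}
    if unknown:
        raise ValueError(f"blueprint references undefined nodes: {sorted(unknown)}")

    def run(state: State) -> State:
        current, steps = blueprint["entry"], 0
        while current != END:
            if steps >= max_steps:  # guard against cycles that never reach END
                raise RuntimeError("agent did not finish within max_steps")
            state = nodes[current](state)
            current = next_node.get(current, END)  # a node with no outgoing edge ends the run
            steps += 1
        return state

    return run
```

Calling `generate_agent(blueprint)` returns a plain function, so the generated agent can be invoked with an initial state dict and inspected through the `trace` list it builds up. In a real setup the same loop over nodes and edges would feed a graph builder instead of this hand-rolled runner.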

The blueprints themselves are structured using Pydantic models, which enforce type safety and validation:
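The original models aren't reproduced here, so treat the following as a rough, dependency-free stand-in for what such a blueprint schema might check. It uses standard-library dataclasses rather than Pydantic, and every name in it (`NodeSpec`, `EdgeSpec`, `AgentBlueprint`, `ALLOWED_NODE_TYPES`, the field names) is hypothetical. In Pydantic the same checks would typically live in field types and validators.

```python
from dataclasses import dataclass

ALLOWED_NODE_TYPES = {"llm", "router", "end"}  # hypothetical catalogue of node types


@dataclass(frozen=True)
class NodeSpec:
    name: str
    type: str
    objective: str = ""

    def __post_init__(self):
        if not self.name:
            raise ValueError("node name must be non-empty")
        if self.type not in ALLOWED_NODE_TYPES:
            raise ValueError(f"unknown node type: {self.type!r}")


@dataclass(frozen=True)
class EdgeSpec:
    source: str
    target: str


@dataclass(frozen=True)
class AgentBlueprint:
    name: str
    entry: str
    nodes: tuple
    edges: tuple = ()

    def __post_init__(self):
        names = [n.name for n in self.nodes]
        if len(names) != len(set(names)):
            raise ValueError("duplicate node names")
        if self.entry not in names:
            raise ValueError(f"entry node {self.entry!r} is not defined")
        # Every edge must connect two nodes that actually exist in the blueprint.
        for edge in self.edges:
            for endpoint in (edge.source, edge.target):
                if endpoint not in names:
                    raise ValueError(f"edge references unknown node {endpoint!r}")


def blueprint_from_dict(data: dict) -> AgentBlueprint:
    """Build a validated blueprint from plain (for example JSON-decoded) data."""
    return AgentBlueprint(
        name=data["name"],
        entry=data["entry"],
        nodes=tuple(NodeSpec(**n) for n in data["nodes"]),
        edges=tuple(EdgeSpec(**e) for e in data.get("edges", [])),
    )
```

The point of the design is that an invalid blueprint fails loudly at construction time, before anything tries to build or run an agent from it.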

Challenges & Interesting Observations

Building this agent factory revealed several fascinating insights:

1. OpenAI's Hidden Guardrails

One of the most interesting discoveries was how OpenAI's built-in guardrails limited what kinds of agents could be created. Even when explicitly instructed, the model refused certain types of configurations that could lead to harmful behavior.

This actually turned out to be a benefit - it prevented potentially problematic agents while still allowing creative and useful ones.

2. Graph Planning Complexity

While LangGraph itself works great, I discovered that LLMs struggle significantly with planning and architecting graph structures properly (I didn't want to rely on reasoning models for this project). The models have difficulty:

Designing coherent flows with proper edge connections

Understanding the logical sequence of operations in a graph

Creating consistent node relationships that make sense at runtime

This was one of the most challenging aspects of the project - getting the AI to produce valid graph architectures that would actually work when executed.
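One way to catch these failures before runtime is a structural check on the proposed graph. The sketch below is an illustration under assumptions, not the project's actual validator: it takes a list of node names, a list of `(source, target)` edge pairs, and an entry node, with `"END"` as a made-up terminal marker. It reports edges that point at undefined nodes, nodes that can't be reached from the entry, and nodes from which the end can never be reached.

```python
from collections import defaultdict, deque

END = "END"  # hypothetical terminal marker


def reachable(start, adjacency):
    """Breadth-first search: every node reachable from `start` via `adjacency`."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in adjacency[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def find_graph_problems(nodes, edges, entry):
    """Return human-readable problems; an empty list means the graph looks runnable."""
    problems = []
    known = set(nodes) | {END}
    forward, backward = defaultdict(set), defaultdict(set)
    for src, dst in edges:
        if src not in known or dst not in known:
            problems.append(f"edge {src}->{dst} references an undefined node")
            continue
        forward[src].add(dst)
        backward[dst].add(src)
    if entry not in nodes:
        problems.append(f"entry node {entry!r} is not defined")
        return problems
    from_entry = reachable(entry, forward)  # what the entry can get to
    to_end = reachable(END, backward)       # what can eventually get to END
    for name in nodes:
        if name not in from_entry:
            problems.append(f"node {name!r} is unreachable from the entry")
        elif name not in to_end:
            problems.append(f"node {name!r} can never reach {END}")
    return problems
```

Returning a list of messages instead of raising on the first error makes it possible to hand all the problems back to the model in one go.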

3. Structured Output Requirements

Getting the AI to generate valid, coherent agent blueprints highlighted a critical requirement: structured output is essential for these kinds of tasks. I observed some important differences:

Closed-source models (like OpenAI's) handled structured output requirements quite well in my tests

The open-source models I tried struggled significantly with maintaining strict output formats

Integration challenges: Structured output isn't just nice-to-have; it's absolutely critical for integration with any working IT infrastructure

The solution was to implement strong validation and provide clear structure through the Pydantic models, but this revealed a gap in capabilities between different types of models that anyone building similar systems should consider.
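A common pattern for this kind of validation is a parse-validate-retry loop that feeds the error message back to the model. The following is a minimal sketch under assumptions: `call_model` is a hypothetical callable that takes a prompt string and returns the model's raw text, the required keys are made up, and plain JSON checks stand in for the Pydantic validation described above.

```python
import json
from typing import Callable

REQUIRED_KEYS = {"name", "entry", "nodes", "edges"}  # hypothetical blueprint fields


def parse_blueprint(raw: str) -> dict:
    """Parse model output; raise ValueError with a message the model can act on."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level JSON value must be an object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def request_blueprint(call_model: Callable[[str], str], prompt: str,
                      max_attempts: int = 3) -> dict:
    """Ask for a blueprint, feeding validation errors back until one parses."""
    message = prompt
    for _ in range(max_attempts):
        raw = call_model(message)
        try:
            return parse_blueprint(raw)
        except ValueError as err:
            # Repeat the original request and tell the model what was wrong.
            message = (f"{prompt}\n\nYour previous reply was rejected: {err}. "
                       "Reply with JSON only.")
    raise RuntimeError(f"no valid blueprint after {max_attempts} attempts")
```

Capping the number of attempts matters here: a model that can't hold the format will otherwise loop forever, and a hard failure is easier to handle than a silently malformed blueprint.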

🎬 Results: The Agent Factory in Action

Potential Applications & Extensions

This agent factory concept has numerous potential applications:

Personalized assistants - Create custom agents for specific tasks without coding

Rapid prototyping - Quickly test different agent architectures before implementation

Educational tools - Teach people about agent design through interactive creation

AI development democratization - Make agent creation accessible to non-developers

💡 It's important to note that this challenge was primarily focused on making a working agent factory system rather than implementing a wide variety of node types. The core functionality was to demonstrate how an AI could generate functioning agent architectures based on user requirements.

Future extensions could include:

Tool integration - Allow the factory to create agents that use external tools and APIs

Agent collaboration - Generate systems of multiple agents that work together

Self-improving agents - Combine this with my original chameleon idea for agents that modify their own blueprints

Expanded node types - Add more specialized node types like calculator functions, web scraping capabilities, email sending functionality, etc., to increase the versatility and practical applications of the generated agents
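For the expanded node types in particular, one possible shape is a registry that maps a type name in the blueprint to a factory function, so adding a node type doesn't require touching the factory itself. This is a hypothetical sketch, not something the current project implements: `NODE_TYPES`, `register`, `build_node`, the `params` key, and the toy calculator node are all invented for illustration.

```python
import operator
from typing import Callable, Dict

NodeFn = Callable[[dict], dict]
NODE_TYPES: Dict[str, Callable[..., NodeFn]] = {}  # type name -> node factory


def register(type_name: str):
    """Decorator that makes a node factory available to blueprints by name."""
    def decorator(factory):
        if type_name in NODE_TYPES:
            raise ValueError(f"node type {type_name!r} is already registered")
        NODE_TYPES[type_name] = factory
        return factory
    return decorator


@register("calculator")
def calculator(operation: str) -> NodeFn:
    """Toy calculator node: combines state['a'] and state['b'] into state['result']."""
    ops = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}
    if operation not in ops:
        raise ValueError(f"unsupported operation {operation!r}")

    def node(state: dict) -> dict:
        return {**state, "result": ops[operation](state["a"], state["b"])}
    return node


def build_node(spec: dict) -> NodeFn:
    """Look up the factory for spec['type'] and build the node with spec['params']."""
    try:
        factory = NODE_TYPES[spec["type"]]
    except KeyError:
        raise ValueError(f"unknown node type {spec['type']!r}") from None
    return factory(**spec.get("params", {}))
```

With this in place, the set of registered names doubles as the list of components the blueprint-writing model is allowed to pick from.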

Conclusions & Lessons Learned

Building this agent factory taught me several important lessons:

Structure matters more than freedom - When it comes to AI-generating-AI, providing strong guardrails and structure leads to more reliable results than complete freedom.

Validation is essential - Never trust the output of an LLM without validation, especially when that output will be executed or used to create another system.

The sweet spot is automation + human oversight - The most effective approach is having the AI do the heavy lifting of creating complex configurations, while humans provide direction and final approval.

LangGraph is powerful but has a learning curve - The graph-based approach offers great control and visibility, but requires careful design to ensure coherent agent behavior.

Try the code yourself—repo link here.

If you build something cool, let me know in the comments!

Until next week, happy crafting! 👋
